Hugo interactive post search script

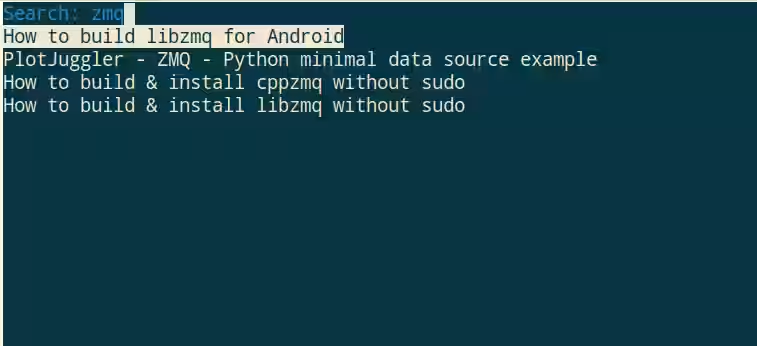

The following script provides interactive search functionality for Hugo posts. It allows you to search for posts by title and provides an interactive, ncurses-based interface to navigate through the search results.

Upon pressing Enter, the script copies a Markdown link to the clipboard, which can be pasted into your Hugo post.

hugo_post_search.py

#!/usr/bin/env python3

"""

Hugo Blog Post Search Tool

A ncurses-based tool for searching through Hugo blog posts, generating URLs,

and copying markdown links to clipboard.

"""

import os

import sys

import json

import re

import curses

import argparse

import hashlib

from datetime import datetime

from pathlib import Path

from typing import Dict, List, Tuple, Optional

import subprocess

try:

import pyperclip

except ImportError:

pyperclip = None

class HugoPost:

"""Represents a Hugo blog post with metadata."""

def __init__(self, filepath: Path):

self.filepath = filepath

self.title = ""

self.slug = ""

self.date = None

self.url = ""

self.mtime = 0

def parse_frontmatter(self) -> bool:

"""Parse the YAML frontmatter of the post."""

try:

with open(self.filepath, 'r', encoding='utf-8') as f:

content = f.read()

# Extract frontmatter

if not content.startswith('---'):

return False

end_marker = content.find('---', 3)

if end_marker == -1:

return False

frontmatter = content[3:end_marker].strip()

# Parse title

title_match = re.search(r'^title:\s*["\']?(.*?)["\']?\s*$', frontmatter, re.MULTILINE)

if title_match:

self.title = title_match.group(1).strip()

# Parse slug

slug_match = re.search(r'^slug:\s*["\']?(.*?)["\']?\s*$', frontmatter, re.MULTILINE)

if slug_match:

self.slug = slug_match.group(1).strip()

# Parse date

date_match = re.search(r'^date:\s*["\']?(.*?)["\']?\s*$', frontmatter, re.MULTILINE)

if date_match:

date_str = date_match.group(1).strip()

try:

# Try different date formats

for fmt in ['%Y-%m-%d', '%Y-%m-%dT%H:%M:%S', '%Y-%m-%dT%H:%M:%SZ']:

try:

self.date = datetime.strptime(date_str.split('T')[0] if 'T' in date_str else date_str, '%Y-%m-%d')

break

except ValueError:

continue

except:

pass

# Get file modification time

self.mtime = os.path.getmtime(self.filepath)

# Generate URL

if self.date and self.slug:

self.url = f"/{self.date.year:04d}/{self.date.month:02d}/{self.date.day:02d}/{self.slug}/"

return bool(self.title and self.slug and self.date)

except Exception as e:

return False

class PostCache:

"""Manages caching of Hugo posts."""

def __init__(self, posts_dir: Path, cache_file: Path):

self.posts_dir = posts_dir

self.cache_file = cache_file

self.posts: List[HugoPost] = []

def get_directory_hash(self) -> str:

"""Get a hash of the posts directory state."""

hash_obj = hashlib.md5()

if not self.posts_dir.exists():

return hash_obj.hexdigest()

for md_file in sorted(self.posts_dir.glob('**/*.md')):

try:

stat = md_file.stat()

hash_obj.update(f"{md_file}:{stat.st_mtime}:{stat.st_size}".encode())

except:

continue

return hash_obj.hexdigest()

def load_cache(self) -> bool:

"""Load posts from cache if valid."""

if not self.cache_file.exists():

return False

try:

with open(self.cache_file, 'r', encoding='utf-8') as f:

cache_data = json.load(f)

# Check if cache is still valid

if cache_data.get('directory_hash') != self.get_directory_hash():

return False

# Load posts from cache

self.posts = []

for post_data in cache_data.get('posts', []):

post = HugoPost(Path(post_data['filepath']))

post.title = post_data['title']

post.slug = post_data['slug']

if post_data['date']:

post.date = datetime.fromisoformat(post_data['date'])

post.url = post_data['url']

post.mtime = post_data['mtime']

self.posts.append(post)

return True

except Exception as e:

return False

def save_cache(self):

"""Save posts to cache."""

posts_data = []

for post in self.posts:

post_data = {

'filepath': str(post.filepath),

'title': post.title,

'slug': post.slug,

'date': post.date.isoformat() if post.date else None,

'url': post.url,

'mtime': post.mtime

}

posts_data.append(post_data)

cache_data = {

'directory_hash': self.get_directory_hash(),

'posts': posts_data

}

# Ensure cache directory exists

self.cache_file.parent.mkdir(parents=True, exist_ok=True)

with open(self.cache_file, 'w', encoding='utf-8') as f:

json.dump(cache_data, f, indent=2)

def scan_posts(self):

"""Scan posts directory and build cache."""

self.posts = []

if not self.posts_dir.exists():

return

for md_file in self.posts_dir.glob('**/*.md'):

post = HugoPost(md_file)

if post.parse_frontmatter():

self.posts.append(post)

# Sort by date (newest first)

self.posts.sort(key=lambda p: p.date or datetime.min, reverse=True)

def get_posts(self) -> List[HugoPost]:

"""Get all posts, loading from cache or scanning if needed."""

if not self.load_cache():

self.scan_posts()

self.save_cache()

return self.posts

class PostSearchUI:

"""Ncurses-based search interface for Hugo posts."""

def __init__(self, posts: List[HugoPost]):

self.posts = posts

self.filtered_posts = posts[:]

self.search_term = ""

self.selected_index = 0

self.scroll_offset = 0

def filter_posts(self, search_term: str):

"""Filter posts based on search term."""

if not search_term.strip():

self.filtered_posts = self.posts[:]

else:

term_lower = search_term.lower()

self.filtered_posts = [

post for post in self.posts

if term_lower in post.title.lower() or term_lower in post.slug.lower()

]

# Reset selection

self.selected_index = 0

self.scroll_offset = 0

def copy_to_clipboard(self, text: str) -> bool:

"""Copy text to clipboard."""

if pyperclip:

try:

pyperclip.copy(text)

return True

except:

pass

# Fallback: try using system commands

try:

# Try xclip (Linux)

subprocess.run(['xclip', '-selection', 'clipboard'],

input=text.encode(), check=True)

return True

except:

try:

# Try pbcopy (macOS)

subprocess.run(['pbcopy'], input=text.encode(), check=True)

return True

except:

pass

return False

def run(self, stdscr):

"""Run the ncurses interface."""

# Initialize colors

curses.curs_set(1) # Show cursor

curses.init_pair(1, curses.COLOR_BLACK, curses.COLOR_WHITE) # Selected

curses.init_pair(2, curses.COLOR_BLUE, curses.COLOR_BLACK) # Search

while True:

height, width = stdscr.getmaxyx()

stdscr.clear()

# Draw search line

search_line = f"Search: {self.search_term}"

stdscr.addstr(0, 0, search_line[:width-1], curses.color_pair(2))

# Draw posts list

visible_height = height - 2

start_idx = self.scroll_offset

end_idx = min(start_idx + visible_height, len(self.filtered_posts))

for i in range(start_idx, end_idx):

row = i - start_idx + 1

if row >= height - 1:

break

post = self.filtered_posts[i]

title = post.title[:width-1]

if i == self.selected_index:

stdscr.addstr(row, 0, title, curses.color_pair(1))

else:

stdscr.addstr(row, 0, title)

# Show status line

if self.filtered_posts:

status = f"{len(self.filtered_posts)} posts found"

if len(self.filtered_posts) > visible_height:

status += f" (showing {start_idx+1}-{end_idx})"

else:

status = "No posts found"

if height > 1:

stdscr.addstr(height-1, 0, status[:width-1])

# Position cursor in search field

cursor_pos = min(len(search_line), width-1)

stdscr.move(0, cursor_pos)

stdscr.refresh()

# Handle input

key = stdscr.getch()

if key == 27: # Escape

return None

elif key in (10, 13): # Enter

if self.filtered_posts and 0 <= self.selected_index < len(self.filtered_posts):

selected_post = self.filtered_posts[self.selected_index]

markdown_link = f"[{selected_post.title}]({selected_post.url})"

return markdown_link

elif key == curses.KEY_UP:

if self.selected_index > 0:

self.selected_index -= 1

if self.selected_index < self.scroll_offset:

self.scroll_offset = self.selected_index

elif key == curses.KEY_DOWN:

if self.selected_index < len(self.filtered_posts) - 1:

self.selected_index += 1

if self.selected_index >= self.scroll_offset + visible_height:

self.scroll_offset = self.selected_index - visible_height + 1

elif key == curses.KEY_BACKSPACE or key == 127:

if self.search_term:

self.search_term = self.search_term[:-1]

self.filter_posts(self.search_term)

elif 32 <= key <= 126: # Printable characters

self.search_term += chr(key)

self.filter_posts(self.search_term)

def main():

parser = argparse.ArgumentParser(description='Hugo Blog Post Search Tool')

parser.add_argument('--posts-dir', type=Path, default=Path('content/post'),

help='Path to Hugo posts directory (default: content/post)')

parser.add_argument('--cache-file', type=Path, default=Path('.hugo_post_cache.json'),

help='Path to cache file (default: .hugo_post_cache.json)')

args = parser.parse_args()

# Initialize cache

cache = PostCache(args.posts_dir, args.cache_file)

try:

posts = cache.get_posts()

except Exception as e:

print(f"Error loading posts: {e}", file=sys.stderr)

return 1

if not posts:

print("No valid Hugo posts found.", file=sys.stderr)

return 1

# Initialize and run UI

ui = PostSearchUI(posts)

try:

result = curses.wrapper(ui.run)

if result:

print(result)

if ui.copy_to_clipboard(result):

print("Copied to clipboard!", file=sys.stderr)

else:

print("Could not copy to clipboard. Install pyperclip or xclip/pbcopy.", file=sys.stderr)

return 0

except KeyboardInterrupt:

return 1

except Exception as e:

print(f"Error: {e}", file=sys.stderr)

return 1

if __name__ == '__main__':

sys.exit(main())If this post helped you, please consider buying me a coffee or donating via PayPal to support research & publishing of new posts on TechOverflow